Quantum computers and mining: what are the dangers for cryptocurrencies?

Quantum computing and mining

The digital community is debating the threat that quantum computing poses to blockchain algorithms. This article looks at what changes the new generation of hardware could bring to the cryptocurrency economy, whether quantum computing and mining can be combined, and how realistic the fear is that PoW mining will cease to exist.

What is a quantum computer?

Conventional machines use a binary system of computation. Any information to be processed is measured in bits and stored as a combination of the digits 1 and 0. The difficulty lies in the sheer volume of data. As soon as you need to simulate many variants over a large number of variables, performance hits a wall. Two bits have 4 unique combinations, and every additional bit doubles that number, so n bits have 2^n combinations. With enough variables, checking every variant in turn becomes impractical.
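To make the growth concrete, here is a minimal Python sketch that lists every combination of a few bits and counts the combinations for larger sizes. The helper names `count_combinations` and `enumerate_combinations` are illustrative and not part of any library.

```python
from itertools import product


def count_combinations(n_bits: int) -> int:
    """Number of distinct values that n_bits binary digits can take."""
    return 2 ** n_bits


def enumerate_combinations(n_bits: int) -> list[str]:
    """List every bit string of length n_bits (only practical for small n_bits)."""
    return ["".join(bits) for bits in product("01", repeat=n_bits)]


if __name__ == "__main__":
    print(enumerate_combinations(2))   # ['00', '01', '10', '11']
    print(count_combinations(10))      # 1024
    print(count_combinations(256))     # a 78-digit number
```

Each extra bit doubles the count, which is why exhaustive search stops being practical long before the inputs look large.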

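A SHA-256 hash is 256 bits long (32 bytes, usually written as 64 hexadecimal characters). SHA-256 is a hash function rather than an encryption scheme, so it cannot simply be decoded. To find an input that produces a given hash, an attacker has to try candidate inputs one after another, with up to 2^256 possibilities. Even with the combined power of today's supercomputers, the process would take vastly longer than a billion years. This is why SHA-256 is considered safe. For now.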

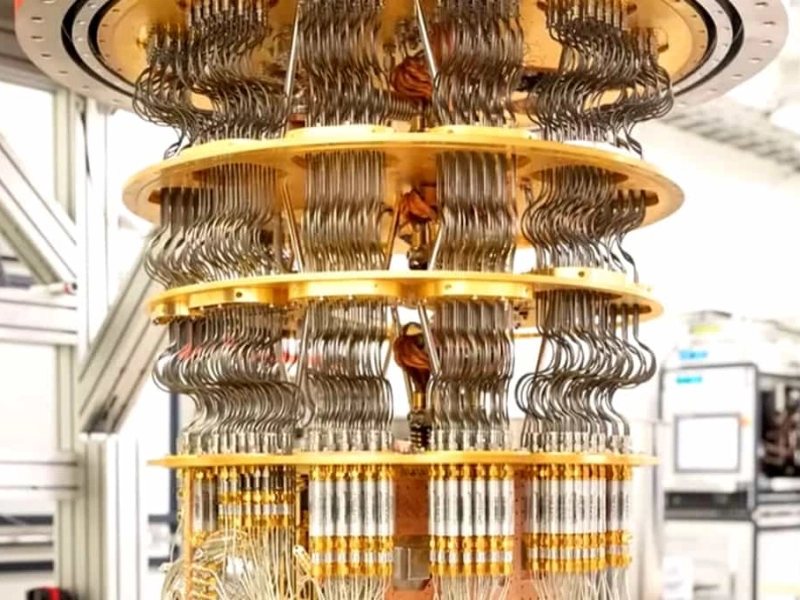

Quantum computing machine.

The 2000Q computer manufactured by D-Wave Systems

Quantum computing has been developed as an alternative to the binary approach. In theory, for certain classes of problems, a sufficiently powerful quantum computer could work through the variants of complex sequences in minutes, where supercomputers would need hundreds of years.

Operating Principle

The quantum method does not rely on brute-force enumeration. Instead of bits, the unit of computation is the qubit. A qubit can be in a superposition of 0 and 1. When measured, it yields 0 or 1 with probabilities set by its state, for example 50% each in an equal superposition.

In other words, until a qubit is measured, both values are present at once within the same computation.
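The 50% figure can be illustrated with a small classical simulation of measuring a single qubit. This is a sketch under simplifying assumptions: the qubit is described by two amplitudes, the probability of each outcome is the squared magnitude of its amplitude, and the `measure` function is a hypothetical helper that only mimics the measurement statistics on an ordinary computer.

```python
import math
import random


def measure(amp0: complex, amp1: complex, rng: random.Random) -> int:
    """Simulate one measurement of a qubit with amplitudes amp0 (for 0) and amp1 (for 1)."""
    p0 = abs(amp0) ** 2
    p1 = abs(amp1) ** 2
    if not math.isclose(p0 + p1, 1.0):
        raise ValueError("amplitudes must be normalized: |amp0|^2 + |amp1|^2 = 1")
    # Outcome 0 occurs with probability p0, outcome 1 otherwise.
    return 0 if rng.random() < p0 else 1


rng = random.Random(42)       # fixed seed so the run is repeatable
amp = 1 / math.sqrt(2)        # equal superposition: 50% for 0, 50% for 1
shots = [measure(amp, amp, rng) for _ in range(10_000)]
fraction_of_ones = sum(shots) / len(shots)

if __name__ == "__main__":
    print(f"fraction of 1s: {fraction_of_ones:.3f}")  # close to 0.5
```

Each individual measurement returns a single 0 or 1. The 50/50 split only shows up in the statistics over many repetitions.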

If the answers are already there, what does the computation spend its time on? To extract the result, an interpretation algorithm has to be applied. Translating information from the quantum computer (QC) space into a comprehensible form takes time.

Hardware for such calculations is under development. In parallel, quantum physics researchers are trying to speed up the interpretation algorithms. Work in this area is under way at major research universities, such as MIT (USA), and in leading commercial research labs, such as those of IBM and Google.

Date of appearance

In 1980, the American researcher Paul Benioff and the Soviet mathematician Yuri Manin published the first works related to the theory of quantum computing. The Nobel laureate in physics Richard Feynman, who proposed the idea of a quantum computer in the early 1980s, is often credited as one of the founders of the field. Theoretical work continued for more than 10 years. Practical progress, however, began after Peter Shor described his integer factorization algorithm in 1994.